Few people knew that the European Parliament, Council and Commission were in the process of negotiating a new legal framework for the development, commissioning and deployment of Artificial Intelligence (AI), until ChatGPT burst onto the market. Suddenly, public opinion turned its attention to the now ubiquitous generative AI, seeking to understand its potential better. It wasn’t long before concerns began to materialise, in the face of the tech industry’s reassuring rhetoric, about the impact of these new technological developments on jobs and human creativity. The rapid pace of AI development, and its many applications, now has many workers in the cultural sector worried, fearing for their future in the absence of a clear and binding regime aimed at putting machines at the service of humans rather than replacing them. These fears now transcend our sector and are also giving rise to major concerns at societal level, as AI could be used to skew reality, spread and amplify bias and discrimination, and undermine the fundamental democratic values underpinning our societies.

It was therefore at the last minute, just as the European institutions were beginning to envisage the end of these negotiations, that the European regulation on artificial intelligence was reopened, with a view to devising standards for generative AI as well. This seemed to us to be a providential opportunity, as it is precisely this type of ‘intelligence’ that poses the most problems. Generative AI feeds on an astonishing amount of content, both protected and unprotected, as well as biometric and other data, in order to learn to generate synthetic content that is increasingly plausible and close to what human intelligence could conceive. In essence, AI must draw on databases containing, in particular, the works of creators, their voices, their images and other personal data (what we call the “input”) to produce something (often called the “output”) so realistic that it could well be mistaken for the creators themselves or for the fruit of their creative work. This synthetic ‘output’, which is often very difficult to detect, can therefore enter into direct competition with human work and even have disastrous consequences for the reputation of artists and other creators in the eyes of a public fooled by the realism of this content.

From a regulatory point of view, two courses of action are needed. The first is to prohibit, or at least hinder, the collection and extraction of protected content and biometric data to feed generative AI without prior informed consent and compensation. The second is to impose maximum transparency on synthetic content, i.e. content produced or modified by generative AI.

We would therefore have hoped for a courageous and strong regulation, from both the input and output points of view, to emerge from the last part of the negotiations on the AI Act. However, the Commission made it clear from the outset that this regulation was not intended to amend the current rules on intellectual property, in particular those established by Directive 2019/790 on Copyright and Related Rights in the Digital Single Market (the CDSM Directive), or the General Data Protection Regulation (GDPR). As a result, the discussion on generative AI opened late and on less than favourable terms, under the pressure of the imminent European elections requiring rapid finalisation, and facing the reluctance of certain Member States, notably France and Germany, to extend the scope of this text to generative AI.

Indeed, neither the CDSM Directive nor the GDPR is equipped to adequately protect creators against the extraction of their works and data by generative AI. The former falls short mainly because of the exception for text and data mining (Article 4 of Directive 2019/790) and the largely hypothetical possibility for artists to reserve their rights. The latter falls short because of the opacity of generative AI models, as well as contractual practices that often make artists’ “consent” to the exploitation of their biometric data a condition for securing an employment contract.

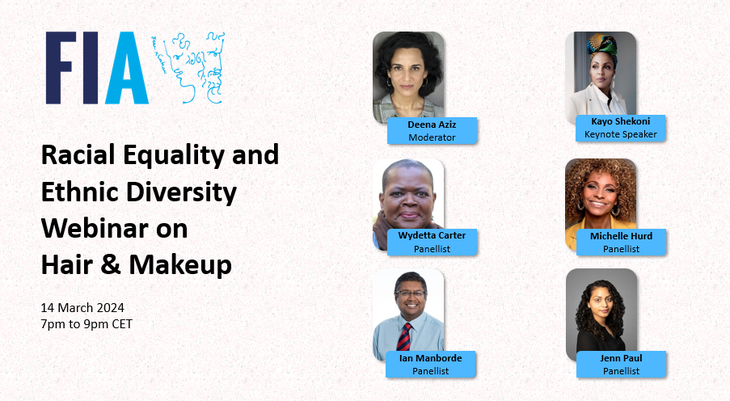

Faced with these constraints, FIA, together with 12 other creators’ organisations, including journalists, composers, musicians, scriptwriters, directors, translators, voice artists and others, had to opt for a more restricted approach aimed at promoting maximum transparency, from both input and output points of view. This would mean that creators could be fully informed of the data extracted by the AI, while consumers could also be aware of the use of generative AI in the content to which they have access.

The end result, while a step in the right direction, falls well short of our expectations: the recently adopted regulation categorises AI according to the different degrees of risk that these applications pose to the health and safety of individuals and our societies. Those deemed to involve unacceptable risks are now banned. High-risk applications, on the other hand, have to comply with strict obligations in terms of security, transparency and quality, while those with a lower risk factor only have to comply with transparency requirements. The concerns of creators, however, have been well and truly ignored.

It is true that the labelling requirements for content produced or altered by artificial intelligence have been tightened, and that a sweeping exemption provided for in the initial proposal for such alterations in the name of ‘freedom of expression’ or ‘freedom of the arts and sciences’ has been removed. The audiovisual sector is not exempt from these rules, although the transparency obligations can be implemented in a way that does not hamper the presentation or enjoyment of the work.

But where this law is particularly disappointing is in relation to the extraction of personal data and copyrighted content for the purposes of training generative AI models: providers of such models must now establish a policy to comply with EU copyright law and undertake to identify and respect any reservation of rights made under the text and data mining exception. They must also draw up and make accessible to the public a “sufficiently detailed summary” of the content used to train the model.

It is clear that this arrangement will not give artists the level of detail necessary to understand the extent to which their works and personal data are used to train generative artificial intelligence. In the absence of evidence, it will be very difficult for them to assert their right of reservation. This right, moreover, is poorly defined as to how it may be exercised in relation to AI. What’s more, artists, who in most cases assign their rights to producers, would probably not be able to invoke it anyway. As for the GDPR, it does not seem to be of much use in this context, due to the lack of detailed information, dubious contractual practices or simply the huge volume of personal and biometric data extracted by generative artificial intelligence for training purposes, regardless of whether the people concerned have been informed or have given their prior consent.

The regulation, which has not yet been published in the Official Journal, will require a large number of implementing measures, including models and codes of conduct drawn up by a specialised unit of the European Commission (the AI Office), in consultation with stakeholders. This leaves some room for improvement. The next challenge, however, will be to obtain an update of the CDSM Directive and the GDPR from the next Commission, which will be no easy task given the concerns about revisiting these regulatory texts, which were already the subject of intense lobbying and hard-won compromises.

On 25 April 2024, FIA and 12 other authors’, performers’ & creative workers’ organisations published a joint statement available for download hereunder: